Apache ZooKeeper (latest version 3.5.0 at the time of writing) is an open-source distributed coordination service, written in Java and originally developed at Yahoo. It is used for maintaining centralized configuration information, providing a naming service, and providing distributed synchronization. Back in 2006, Google published a paper on “Chubby”; ZooKeeper, not surprisingly, is a close clone of Chubby.

Coordination services of a distributed system:

- Name service – A name service maps a name to some information associated with that name. A telephone directory is a name service that maps a person’s name to a telephone number. In the same way, DNS is a name service that maps a domain name to an IP address. In a distributed system, we may want to keep track of which servers or services are up and running and look up their status by name.

- Locking – To allow serialized access to a shared resource in a distributed system, we may need to implement distributed mutexes. ZooKeeper provides an easy way to implement them. See the algorithm below.

- Synchronization – Hand in hand with distributed mutexes is the need for synchronizing access to shared resources. ZooKeeper provides a simple interface to implement that.

- Configuration management – ZooKeeper can centrally store and manage the configuration of a distributed system. Any new node picks up the up-to-date centralized configuration from ZooKeeper as soon as it joins the system. This also lets you change the state of the distributed system centrally, by changing the configuration through one of the ZooKeeper clients.

- Leader election – A distributed system has to deal with nodes going down, and you may want to implement an automatic fail-over strategy. ZooKeeper makes this straightforward through leader election. See the algorithm below.

If you’ve ever managed a database cluster with just 10 servers, you know there’s a need for centralized management of the entire cluster in terms of name services, group services, synchronization services, configuration management, and more. You can develop all of these coordination services from scratch, which is error-prone, or use ZooKeeper instead.

Note: Apache Kafka, Storm, HBase, and Hadoop 2.0 are a few of the distributed systems that already use ZooKeeper as their coordination service.

Distributed Challenges:

- Race condition

- Deadlock

- Partial failures – One application sends a message to another, and the network dies before a response arrives. The sender then cannot tell whether the message was delivered, whether it was processed, or whether it failed.

- Inconsistency – A follower may lag behind the leader, so it may not yet have the most recent updates that the leader has.

ZooKeeper Goals:

- Serialization

- Reliability

- Atomicity – An update either succeeds completely or fails completely.

- Fast – It keeps all data in memory.

ZooKeeper Session:

- Tick time

- Session timeout

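- Automatic failover – When a client connects to ZooKeeper, ZooKeeper starts a session. If the client is connected to server1 and server1 dies, the client reconnects to another server (say server2), and the session carries over with the same session ID and the same session data, provided the client reconnects before the session timeout expires.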
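Tick time and session timeout are related: the server bounds the timeout a client asks for in terms of ticks. The sketch below assumes the default bounds of 2 and 20 ticks and a tick time of 2000 ms. The function name `negotiate_session_timeout` is made up for illustration and is not part of the ZooKeeper API.

```python
def negotiate_session_timeout(requested_ms, tick_time_ms=2000,
                              min_ticks=2, max_ticks=20):
    """Clamp a client's requested session timeout to the allowed range.

    Assumption: bounds of 2x and 20x the tick time (the usual defaults).
    """
    lower = min_ticks * tick_time_ms
    upper = max_ticks * tick_time_ms
    return max(lower, min(requested_ms, upper))


print(negotiate_session_timeout(1000))   # below the minimum -> 4000
print(negotiate_session_timeout(10000))  # within range      -> 10000
print(negotiate_session_timeout(60000))  # above the maximum -> 40000
```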

ZooKeeper Watcher:

- Watchers are listeners that a client registers with ZooKeeper to be notified of an event, such as a change to a Znode.
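A standard ZooKeeper watch is a one-time trigger: it fires once and must be registered again to see later changes. The following is a minimal in-memory sketch of that behavior, not the real client API. The class `WatchedNode` and the event string are assumptions chosen for illustration.

```python
class WatchedNode:
    """In-memory stand-in for a znode that supports one-shot data watches."""

    def __init__(self, data):
        self.data = data
        self._watchers = []

    def get_data(self, watcher=None):
        # Reading with a watcher registers it for the NEXT change only.
        if watcher is not None:
            self._watchers.append(watcher)
        return self.data

    def set_data(self, data):
        self.data = data
        # Clear the watch list before firing, so each watch fires once.
        pending, self._watchers = self._watchers, []
        for callback in pending:
            callback("NodeDataChanged")


events = []
node = WatchedNode("v1")
node.get_data(watcher=events.append)  # register a watch
node.set_data("v2")                   # fires the watch
node.set_data("v3")                   # no watch left, nothing fires
print(events)
```

To keep observing changes, a client would register the watch again each time it handles an event.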

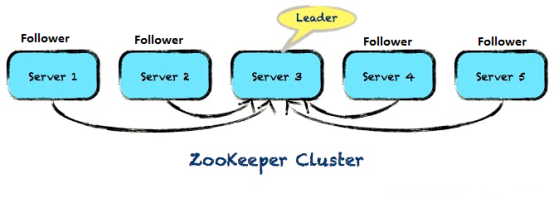

ZooKeeper Cluster (Called Ensemble):

- Leader

- Followers – Followers synchronize with the leader, but they can lag behind it.

- Observer – A non-voting member that addresses the write-performance cost of the voting mechanism. See the Note in the Data Consistency section.

Figure-1: ZooKeeper Ensemble

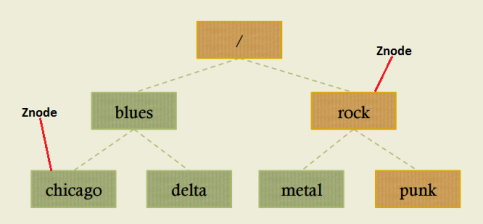

ZooKeeper Data Model (in memory):

- Tree like hierarchical filesystem

- Nodes in the tree are called Znodes

- Znode – A Znode may contain data and/or other child Znodes.

- All servers keep a copy of the same data model in memory

- All servers also hold a persistent copy on disk.

Figure-2: Data Model with UNIX-style paths (e.g. /rock/punk)

Note: ZooKeeper manages coordination data. It is not designed to hold large data. The ZooKeeper client and server make sure that each Znode holds less than 1 MB of data.
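The data model can be pictured with a small in-memory sketch: a flat map from UNIX-style paths to data, where a Znode can only be created under an existing parent. The class `ZnodeTree` and the constant `MAX_DATA_BYTES` are hypothetical, and the size check only mirrors the roughly 1 MB limit noted above.

```python
MAX_DATA_BYTES = 1024 * 1024  # assumed limit, mirroring the ~1 MB note


class ZnodeTree:
    """Toy hierarchical namespace keyed by absolute path."""

    def __init__(self):
        self.nodes = {"/": b""}  # the root always exists

    def create(self, path, data=b""):
        parent = path.rsplit("/", 1)[0] or "/"
        if parent not in self.nodes:
            raise KeyError("parent does not exist: " + parent)
        if path in self.nodes:
            raise KeyError("node already exists: " + path)
        if len(data) >= MAX_DATA_BYTES:
            raise ValueError("znode data too large")
        self.nodes[path] = data

    def get_children(self, path):
        # Direct children only: one path component below `path`.
        prefix = path.rstrip("/") + "/"
        return sorted(
            p[len(prefix):] for p in self.nodes
            if p != "/" and p.startswith(prefix)
            and "/" not in p[len(prefix):]
        )


tree = ZnodeTree()
tree.create("/rock", b"genre")
tree.create("/rock/punk", b"subgenre")
print(tree.get_children("/"))      # ['rock']
print(tree.get_children("/rock"))  # ['punk']
```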

Data Consistency in ZooKeeper:

- Sequential updates – Update requests are applied in order.

- Durability – Once a write is committed, it persists.

- Atomicity – All or nothing

Note:

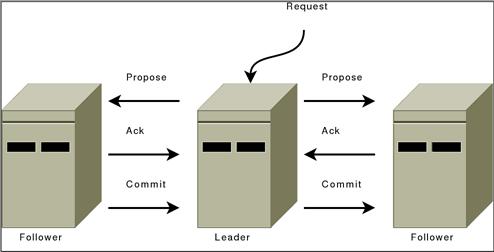

- Whenever a change is made, it is not considered successful until it has been written to a quorum of the servers in the ensemble. For example, in a cluster of 3 machines, the change is committed once 2 machines have written it successfully.

- There is a drawback to quorum-based voting: it makes it hard to scale out to huge numbers of clients. As we add more voting members (ZooKeeper nodes), write performance drops, because a write requires the agreement of a majority (more than half) of the voting nodes in the ensemble, so the cost of a vote can increase significantly as more voters are added. To address this problem, ZooKeeper introduced a new type of node called an Observer, which does not take part in voting.
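The quorum arithmetic is easy to check with a short calculation. This sketch assumes a simple majority quorum over the voting members only (Observers are not counted). The function names are made up for illustration.

```python
def quorum_size(voters):
    """Smallest number of voters that forms a strict majority."""
    return voters // 2 + 1


def tolerated_failures(voters):
    """How many voters can fail while a quorum is still reachable."""
    return voters - quorum_size(voters)


for n in (3, 4, 5, 7):
    print(n, "voters: quorum", quorum_size(n),
          "tolerates", tolerated_failures(n), "failures")
```

Under this assumption, 4 voters tolerate no more failures than 3 do, which is why ensembles are usually sized with an odd number of voting members.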

Znode Types:

- Persistent – Exists until explicitly deleted.

- Ephemeral – Deleted automatically when the session that created it ends.

- Sequential – ZooKeeper automatically appends a sequence number suffix to the Znode name. This applies to both persistent and ephemeral Znodes.

Note: An easy way of doing leader election with ZooKeeper is to let every server publish its information in a Znode that is both sequential and ephemeral. Then, whichever server has the Znode with the lowest sequence number (the oldest member) is the leader.
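To make the sequence suffix concrete, here is a minimal sketch of how a parent might name sequential children. It assumes a per-parent counter starting at 0 and a zero-padded 10-digit suffix. The class `SequentialParent` is hypothetical and only imitates the naming, not the real server.

```python
class SequentialParent:
    """Toy parent znode that hands out sequential child names."""

    def __init__(self):
        self.counter = 0
        self.children = []

    def create_sequential(self, prefix):
        # Zero-padding keeps lexicographic order equal to creation order.
        name = "%s%010d" % (prefix, self.counter)
        self.counter += 1
        self.children.append(name)
        return name


parent = SequentialParent()
print(parent.create_sequential("node_"))  # node_0000000000
print(parent.create_sequential("node_"))  # node_0000000001
print(min(parent.children))               # the oldest member
```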

Znode Operations:

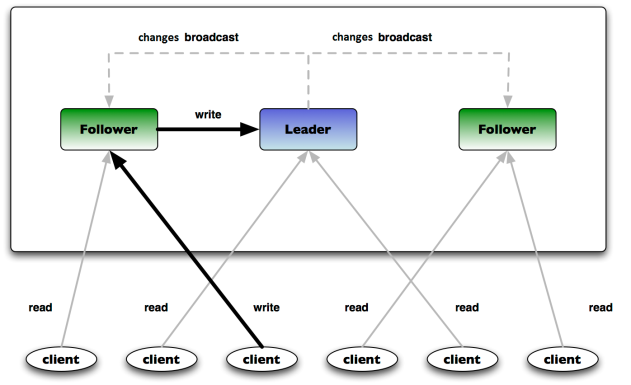

A client can connect to and read from any ZooKeeper server; writes go through the leader.

- create (write operation)

- delete (write operation)

- exists (read operation)

- getChildren (read operation)

- getData (read operation)

- setData (write operation)

- getACL (read operation)

- setACL (write operation)

- sync (read operation)

Figure-3: Read/Write Operation

Figure-4: ZAB Protocol (Changes Broadcast)

Note:

- CRUD data operations in ZooKeeper correspond to cluster node operations: create data (add a cluster node), read data (access a cluster node), update data (update a cluster node), and delete data (remove a cluster node).

- ZooKeeper doesn’t permit Znodes that have children to be deleted by calling the delete() method, so we first need to delete all the children, and then delete the group (parent) Znode.

- ZooKeeper uses the ZAB (ZooKeeper Atomic Broadcast) algorithm to broadcast its changes (write operations) from the leader to the followers.

- ZooKeeper uses ACLs (Access Control List) to control access to its Znodes (the data nodes of a ZooKeeper data tree). The ACL implementation is quite similar to UNIX file access permissions. ACL permissions supported are: CREATE, READ, WRITE, DELETE, ADMIN.
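The rule that a parent cannot be deleted while it has children leads to a children-first deletion. The sketch below models the namespace as a plain set of paths. The helpers `children_of`, `delete`, `delete_recursive` and the exception `NotEmptyError` are hypothetical stand-ins, not the real client calls.

```python
class NotEmptyError(Exception):
    """Raised when deleting a node that still has children."""


def children_of(nodes, path):
    # Direct children: exactly one path component below `path`.
    prefix = path + "/"
    return [p for p in nodes
            if p.startswith(prefix) and "/" not in p[len(prefix):]]


def delete(nodes, path):
    if children_of(nodes, path):
        raise NotEmptyError(path)
    nodes.remove(path)


def delete_recursive(nodes, path):
    # Remove every descendant first, then the node itself.
    for child in children_of(nodes, path):
        delete_recursive(nodes, child)
    delete(nodes, path)


nodes = {"/group", "/group/a", "/group/a/x", "/group/b", "/other"}
delete_recursive(nodes, "/group")
print(sorted(nodes))  # ['/other']
```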

ZooKeeper Leader Election:

Leader election refers to the problem of selecting a leader among a group of nodes belonging to one logical cluster. This is a hard problem in the face of node crashes. With ZooKeeper, the problem can be solved using the following algorithm:

- Create a permanent znode (/election/) in ZooKeeper.

- Each client in the group creates an ephemeral node with the “sequence” flag set (/election/node_), so the node is both ephemeral and sequential. The sequence flag makes ZooKeeper append a monotonically increasing number to the node name. For instance, the first client to create its ephemeral node gets /election/node_1, while the second client gets /election/node_2. (In practice the suffix is a zero-padded 10-digit counter, such as node_0000000001.)

- After creating its ephemeral node, the client fetches all the children under /election. The client that created the node with the smallest sequence number is elected leader. This is an unambiguous way of electing the leader.

- Every other client watches the existing node with the next lower sequence number than its own. If the “watched” node gets deleted, the client repeats the previous step (fetching the children) to check whether it has become the leader.

- If all clients need to know of the current leader, they can subscribe to group notifications for the node “/election” and determine the current leader on their own.
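The election steps above can be sketched as a small in-memory simulation. It assumes sequence numbers are handed out by a simple counter and that a crashed client's ephemeral node just disappears. The class `Election` and its methods are hypothetical and do not talk to a real ensemble.

```python
class Election:
    """Toy model of ephemeral sequential nodes under /election."""

    def __init__(self):
        self.next_seq = 0
        self.members = {}  # sequence number -> client name

    def join(self, client):
        seq = self.next_seq
        self.next_seq += 1
        self.members[seq] = client
        return seq

    def leave(self, seq):
        # Models the ephemeral node vanishing (crash or clean exit).
        self.members.pop(seq, None)

    def leader(self):
        return self.members[min(self.members)] if self.members else None

    def watch_target(self, seq):
        # The existing node with the largest sequence below `seq`.
        lower = [s for s in self.members if s < seq]
        return max(lower) if lower else None


e = Election()
a, b, c = e.join("a"), e.join("b"), e.join("c")
print(e.leader())         # a
print(e.watch_target(c))  # 1 (client b's node)
e.leave(b)                # b crashes
print(e.watch_target(c))  # 0 (client a's node)
e.leave(a)                # the leader crashes
print(e.leader())         # c
```

Watching only the predecessor means a single deletion wakes a single client, rather than every member of the group.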

ZooKeeper Locking Mechanism:

ZooKeeper can be used to construct global locks. Here is the algorithm:

Acquiring a Lock:

- Create a permanent node “/lock” in ZooKeeper.

- Each client in the group creates an ephemeral node with the “sequence” flag set (/lock/node_) by calling the create() method, so the node is both ephemeral and sequential.

- After creating its ephemeral node, the client fetches all the children under /lock by calling the getChildren() method. The client that created the node with the smallest sequence number in the child list holds the lock.

- Every other client watches the existing node with the next lower sequence number than its own (for example, if the client’s node has sequence number 7, it sets a watch on node 6, or on the closest lower-numbered node that still exists). If the “watched” node gets deleted, the client repeats the previous step (fetching the children) to check whether it now holds the lock.

Releasing a Lock:

- The client that holds the lock deletes the ephemeral node it created.

- The client that created the node with the next higher sequence number is notified and then holds the lock.

- If all clients need to know about the change of lock ownership, they can listen to group notifications for the node “/lock” and determine the current owner.
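The acquire and release steps can be illustrated with a short simulation that tracks who gets notified when a lock node is deleted. It is a sketch under simplifying assumptions (a single process, no real watches or sessions). The class `LockQueue` and its fields are hypothetical.

```python
class LockQueue:
    """Toy model of ephemeral sequential nodes under /lock."""

    def __init__(self):
        self.next_seq = 0
        self.nodes = {}     # sequence number -> client name
        self.notified = []  # clients woken by a node deletion

    def enqueue(self, client):
        seq = self.next_seq
        self.next_seq += 1
        self.nodes[seq] = client
        return seq

    def holder(self):
        # The lowest sequence number holds the lock.
        return self.nodes[min(self.nodes)] if self.nodes else None

    def release(self, seq):
        """Delete a lock node and wake only the client watching it."""
        del self.nodes[seq]
        successors = [s for s in self.nodes if s > seq]
        if successors:
            self.notified.append(self.nodes[min(successors)])


lock = LockQueue()
a, b, c = lock.enqueue("a"), lock.enqueue("b"), lock.enqueue("c")
print(lock.holder())   # a
lock.release(a)
print(lock.notified)   # ['b']
print(lock.holder())   # b
```

Each release wakes at most one waiter, so the lock is handed over in the order in which clients created their nodes.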

ZooKeeper Group Membership

In addition to the higher-order functions above, group membership is a “popular” ready-made feature available in ZooKeeper. It is achieved by exploiting the child notification mechanism. Here are the details:

- A permanent node (e.g. “/mygroup/”) represents the logical group node.

- Clients create ephemeral nodes under the group node to indicate membership.

- All the members of the group subscribe to the group node “/mygroup”, and are thereby aware of the other members in the group.

- If a client shuts down (normally or abnormally), ZooKeeper guarantees that the ephemeral nodes corresponding to that client are removed automatically and the group members are notified.
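The membership scheme above can be sketched in memory as follows. The sketch assumes that each ephemeral node is tied to a session ID and that subscribers receive the full member list on every change. With the real client, child watches fire once and have to be registered again. The class `Group` and its methods are hypothetical.

```python
class Group:
    """Toy model of ephemeral member nodes under /mygroup."""

    def __init__(self):
        self.members = {}    # member name -> owning session id
        self.listeners = []  # callbacks receiving the member list

    def subscribe(self, callback):
        self.listeners.append(callback)

    def _notify(self):
        snapshot = sorted(self.members)
        for callback in self.listeners:
            callback(snapshot)

    def join(self, name, session_id):
        self.members[name] = session_id
        self._notify()

    def session_closed(self, session_id):
        # Ephemeral nodes disappear together with their session.
        gone = [n for n, s in self.members.items() if s == session_id]
        for name in gone:
            del self.members[name]
        if gone:
            self._notify()


views = []
group = Group()
group.subscribe(views.append)
group.join("web-1", session_id=1)
group.join("web-2", session_id=2)
group.session_closed(1)  # web-1 shuts down or crashes
print(views[-1])         # ['web-2']
```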

ZooKeeper Client API:

Clients can work with ZooKeeper through the command line or the ZooKeeper API. ZooKeeper clients are basically the cluster nodes. The ZooKeeper project maintains two client API libraries, one in Java and one in C. For other programming languages, there are libraries that wrap either the Java or the C client.

Practical usage of ZooKeeper in Kafka:

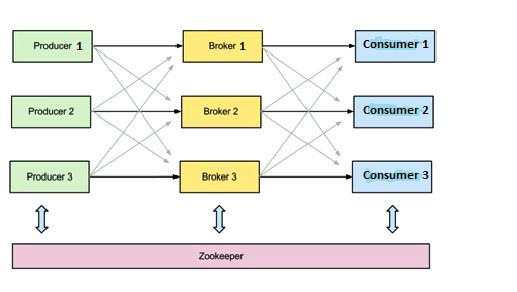

ZooKeeper is used for managing and coordinating Kafka brokers. Each Kafka broker coordinates with the other brokers through ZooKeeper. Producers and consumers are notified by the ZooKeeper service about the presence of a new broker or the failure of a broker in the Kafka system. Based on these notifications, producers and consumers decide how to proceed and start coordinating their work with another broker. This is shown in Figure-5.

ZooKeeper source code:

https://svn.apache.org/repos/asf/zookeeper/trunk/

Note: Check out from “trunk”. Most development is done on the “trunk”.