Download and install Eclipse.

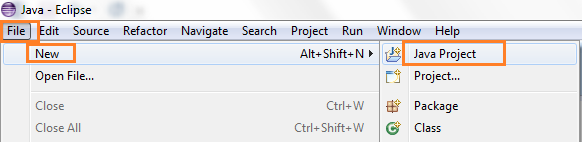

Create New Java Project

Add Hadoop Dependencies JARs

After downloading Hadoop, add all the JAR files in its lib folder to the project. Right-click the project, open Properties, select Java Build Path, and add the files on the Libraries tab with Add External JARs.

The Word count example

We’re going to create a simple word count example: given a text file, the program counts the occurrences of each word in it. The program consists of three classes:

- WordCountMapper.java: the mapper.

- WordCountReducer.java: the reducer.

- WordCountDriver.java: the driver. The job configuration (input type, output type, job name…) is done here.
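
Before looking at the Hadoop classes, it may help to see the same idea without a cluster. The following is only a sketch in plain Java, not part of the project: the class name `LocalWordCountSketch` and its methods are made up for illustration. It runs a "map" step that emits (word, 1) pairs and a "reduce" step that sums them per word, all in one JVM.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;
import java.util.TreeMap;

// Hypothetical helper class; it only imitates the MapReduce flow locally.
class LocalWordCountSketch {

    // "Map" step: emit one (word, 1) pair per whitespace-separated token.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<Map.Entry<String, Integer>>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            pairs.add(new AbstractMap.SimpleEntry<String, Integer>(tokenizer.nextToken(), 1));
        }
        return pairs;
    }

    // "Shuffle" and "reduce" steps: group the pairs by word and sum the counts.
    static Map<String, Integer> reduce(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> totals = new TreeMap<String, Integer>();
        for (Map.Entry<String, Integer> pair : pairs) {
            totals.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return totals;
    }

    // Runs the map step on every line, then reduces all emitted pairs.
    static Map<String, Integer> countWords(List<String> lines) {
        List<Map.Entry<String, Integer>> allPairs = new ArrayList<Map.Entry<String, Integer>>();
        for (String line : lines) {
            allPairs.addAll(map(line));
        }
        return reduce(allPairs);
    }

    public static void main(String[] args) {
        System.out.println(countWords(Arrays.asList("the quick fox", "the lazy dog")));
    }
}
```

In the real job, Hadoop does the grouping between the two steps and spreads the work across the cluster; the three classes below only supply the map logic, the reduce logic, and the job configuration.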

WordCountMapper.java

    package com.hadoop.sample;

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.io.*;
    import org.apache.hadoop.mapred.*;

    public class WordCountMapper extends MapReduceBase implements
            Mapper<LongWritable, Text, Text, IntWritable> {

        // Hadoop-supported data types
        private final static IntWritable one = new IntWritable(1);
        private Text word = new Text();

        // Tokenizes each input line and emits the initial (word, 1) key-value pairs
        public void map(LongWritable key, Text value,
                OutputCollector<Text, IntWritable> output, Reporter reporter)
                throws IOException {
            // Take one line at a time and tokenize it
            String line = value.toString();
            StringTokenizer tokenizer = new StringTokenizer(line);

            // Iterate over all the words in the line and form a key-value pair for each
            while (tokenizer.hasMoreTokens()) {
                word.set(tokenizer.nextToken());
                // Send the pair to the output collector, which in turn passes it to the reducer
                output.collect(word, one);
            }
        }
    }
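
One detail of the mapper worth seeing in isolation: `StringTokenizer` with its default delimiters splits only on whitespace, so punctuation stays attached to words and case is preserved. The sketch below uses plain Java with no Hadoop types. The `normalize` helper is a hypothetical addition, not something the mapper above does; it shows one possible way to fold such variants together if that is what you want.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.StringTokenizer;

// Hypothetical class that mirrors the tokenizing loop in WordCountMapper.map().
class TokenizerSketch {

    // Returns the tokens exactly as the mapper would emit them as keys.
    static List<String> tokens(String line) {
        List<String> result = new ArrayList<String>();
        StringTokenizer tokenizer = new StringTokenizer(line);
        while (tokenizer.hasMoreTokens()) {
            result.add(tokenizer.nextToken());
        }
        return result;
    }

    // Assumption: lower-casing and dropping non-alphanumeric characters is the
    // desired cleanup. The original mapper performs no such step.
    static String normalize(String token) {
        return token.toLowerCase(Locale.ROOT).replaceAll("[^\\p{L}\\p{N}]", "");
    }

    public static void main(String[] args) {
        // prints [Paris,, paris, London, Paris]
        System.out.println(tokens("Paris, paris  London\tParis"));
    }
}
```

With the mapper as written, "Paris,", "paris" and "Paris" are three different keys and are counted separately.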

WordCountReducer.java

    package com.hadoop.sample;

    import java.io.IOException;
    import java.util.Iterator;

    import org.apache.hadoop.io.*;
    import org.apache.hadoop.mapred.*;

    public class WordCountReducer extends MapReduceBase implements
            Reducer<Text, IntWritable, Text, IntWritable> {

        /*
         * The reduce method accepts the key-value pairs from the mappers,
         * aggregates them by key and produces the final output.
         */
        public void reduce(Text key, Iterator<IntWritable> values,
                OutputCollector<Text, IntWritable> output, Reporter reporter)
                throws IOException {
            int sum = 0;

            /*
             * Iterate through all the values available for a key, add them
             * together and emit the key with the sum of its values.
             */
            while (values.hasNext()) {
                sum += values.next().get();
            }
            output.collect(key, new IntWritable(sum));
        }
    }
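
To make the reduce step concrete: for each distinct word, the reducer receives the word and an iterator over all the 1s emitted for it, and it outputs the word with their sum. A minimal stand-alone sketch of that loop, using `Integer` in place of `IntWritable` and a made-up class name:

```java
import java.util.Arrays;
import java.util.Iterator;

// Hypothetical class; it reproduces only the summing loop of WordCountReducer.
class ReduceSketch {

    // Consumes the iterator once and returns the total of its values.
    static int sum(Iterator<Integer> values) {
        int sum = 0;
        while (values.hasNext()) {
            sum += values.next();
        }
        return sum;
    }

    public static void main(String[] args) {
        // A word seen three times arrives as the values 1, 1, 1.
        Iterator<Integer> valuesForOneWord = Arrays.asList(1, 1, 1).iterator();
        System.out.println("Paris\t" + sum(valuesForOneWord));
    }
}
```

Note that the iterator can be walked only once, which is why the sum is accumulated in a single pass.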

WordCountDriver.java

    package com.hadoop.sample;

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.conf.*;
    import org.apache.hadoop.io.*;
    import org.apache.hadoop.mapred.*;
    import org.apache.hadoop.util.*;

    public class WordCountDriver extends Configured implements Tool {

        public int run(String[] args) throws Exception {
            // Create a JobConf object and assign a job name for identification purposes
            JobConf conf = new JobConf(getConf(), WordCountDriver.class);
            conf.setJobName("WordCount");

            // Set the data types of the output key and value
            conf.setOutputKeyClass(Text.class);
            conf.setOutputValueClass(IntWritable.class);

            // Provide the mapper and reducer classes
            conf.setMapperClass(WordCountMapper.class);
            conf.setReducerClass(WordCountReducer.class);

            // The HDFS input and output directories are taken from the command line
            FileInputFormat.addInputPath(conf, new Path(args[0]));
            FileOutputFormat.setOutputPath(conf, new Path(args[1]));

            JobClient.runJob(conf);
            return 0;
        }

        public static void main(String[] args) throws Exception {
            int res = ToolRunner.run(new Configuration(), new WordCountDriver(), args);
            System.exit(res);
        }
    }
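
The driver reads `args[0]` and `args[1]` without checking them, so starting the job without both paths would fail with an `ArrayIndexOutOfBoundsException`. If you want a friendlier message, a small guard along these lines could be called at the top of `run()`. This is only a sketch; the class and the `usageError` method are hypothetical names, not part of Hadoop or of the project above.

```java
import java.util.Optional;

// Hypothetical helper for validating the driver's command-line arguments.
class DriverArgsSketch {

    // Returns a usage message when the argument count is wrong, otherwise empty.
    static Optional<String> usageError(String[] args) {
        if (args.length != 2) {
            return Optional.of("Usage: WordCountDriver <input path> <output path>");
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        Optional<String> error = usageError(args);
        if (error.isPresent()) {
            System.err.println(error.get());
        } else {
            System.out.println("Input: " + args[0] + ", output: " + args[1]);
        }
    }
}
```

Inside `run()`, the driver could print the message and return a non-zero exit code instead of submitting the job.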

When you are finished, the project should contain the three classes above in the com.hadoop.sample package.

Export the project to a JAR file (samplehadoop.jar): right-click the project and select “Export”.

Follow these steps to run the job on the Hadoop NameNode:

Copy samplehadoop.jar and the input file PLACES.TXT (paste the content of the downloaded places-txt.docx into PLACES.TXT) from Windows to a directory on the Linux LFS (Local File System), here /home/ubuntu/wordcount/, using FileZilla.

Create an input directory in HDFS (Hadoop Distributed File System)

| $ hadoop fs -mkdir /home/ubuntu/wordcount/input/ |

Copy the input file from the Linux LFS to HDFS

| $ hadoop fs -copyFromLocal /home/ubuntu/wordcount/PLACES.TXT /home/ubuntu/wordcount/input/ |

Execute the jar

| $ hadoop jar /home/ubuntu/wordcount/samplehadoop.jar com.hadoop.sample.WordCountDriver /home/ubuntu/wordcount/input/ /home/ubuntu/wordcount/output/ |

Once the job reports a success status, the output file (part-00000) can be seen in the output directory.

| $ hadoop fs -ls /home/ubuntu/wordcount/output/ |

To investigate the output file further, first retrieve the data from HDFS to the Linux LFS

| $ hadoop fs -copyToLocal /home/ubuntu/wordcount/output/ /home/ubuntu/wordcount/ |
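
Once the output is on the local file system, it can be inspected with any tool. As an illustration, here is a small plain-Java sketch that reads part-00000 into a map. It assumes the job's default text output, where each line holds a word and its count separated by a tab, and it assumes the local path produced by the copyToLocal command above; the class name is made up.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for reading the word count output on the local file system.
class OutputParserSketch {

    // Parses lines of the form "word<TAB>count"; lines without a tab are skipped.
    static Map<String, Integer> parse(List<String> lines) {
        Map<String, Integer> counts = new LinkedHashMap<String, Integer>();
        for (String line : lines) {
            int tab = line.lastIndexOf('\t');
            if (tab < 0) {
                continue;
            }
            String word = line.substring(0, tab);
            int count = Integer.parseInt(line.substring(tab + 1).trim());
            counts.put(word, count);
        }
        return counts;
    }

    public static void main(String[] args) throws IOException {
        // Assumed default location; pass another path as the first argument if needed.
        String file = args.length > 0 ? args[0] : "/home/ubuntu/wordcount/output/part-00000";
        Map<String, Integer> counts = parse(Files.readAllLines(Paths.get(file)));
        for (Map.Entry<String, Integer> entry : counts.entrySet()) {
            System.out.println(entry.getValue() + "\t" + entry.getKey());
        }
    }
}
```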

Then copy it from the Linux LFS to the desired Windows location, for example with FileZilla.

**DO NOT forget to terminate (delete) all 4 EC2 instances that you have created.**

Select all 4 EC2 instances, right-click, and select Instance State > Terminate.

That’s it for this four-part series. I hope you found it useful and enjoyed it.

Happy Hadoop Year!!
