In this post, I try to show most of the Hive components and their dependencies, from older Hive versions to newer ones. I made a single architecture diagram that may help you visualize the complete Hive architecture, including the common client interfaces. I have kept the text short and let the big diagram do most of the work, so it helps you visualize the architecture instead of reading it and then forgetting it (as happens in my case 🙂). The diagram includes both HiveServer1 and HiveServer2. HiveServer2, available starting with Hive 0.11.0, is a rewrite of HiveServer1 (sometimes called HiveServer or Thrift Server) that addresses multi-client concurrency and authentication problems, which I will discuss later in this post. Use of HiveServer2 is recommended.

Data Warehouse: Classic Use Cases for Hadoop in DW

Enterprise Data Warehousing (EDW) has been a mainstay of many major corporations for the last 20 years. However, with the tremendous growth of data (doubling every two years), enterprise data warehouses are reaching their capacity too quickly. Load processing windows are also being maxed out, which degrades service and threatens the delivery of critical business insights. As a result, processing and maintaining large datasets becomes very expensive for organisations.

How MapReduce Works

- Write a MapReduce Java program and bundle it into a JAR file. My previous post, “First Example Project using Eclipse”, shows how to create a MapReduce program in Java using Eclipse and bundle it into a JAR file.

- The client submits the job to the JobTracker by running the JAR file ($ hadoop jar ….). The driver program (WordCountDriver.java) acts as the client and submits the job by calling “JobClient.runJob(conf);”. This program can run on any node in the Hadoop cluster (as a separate JVM) or outside the cluster. In our example, we run the client program on the same machine as the JobTracker, which is usually the NameNode. The job submission steps include:
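
Independent of the submission details, the data flow inside a job like WordCount can be sketched as a minimal single-process Python simulation of the map, shuffle/sort and reduce phases. This is only an in-memory model of the idea, not the Hadoop API: the function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) are hypothetical, and nothing here is distributed.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(lines: Iterable[str]) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every whitespace-separated word."""
    for line in lines:
        for word in line.split():
            yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle/sort: group values by key and sort by key before reducing."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(sorted(groups.items()))


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Reducer: sum the counts collected for each word."""
    return {word: sum(counts) for word, counts in groups.items()}


def run_job(lines: Iterable[str]) -> Dict[str, int]:
    """Chain the three phases, roughly as a driver wires Mapper and Reducer."""
    return reduce_phase(shuffle(map_phase(lines)))


if __name__ == "__main__":
    text = ["hello hadoop", "hello mapreduce", "hadoop mapreduce hadoop"]
    print(run_job(text))  # {'hadoop': 3, 'hello': 2, 'mapreduce': 2}
```

In a real cluster the map calls run in parallel on the nodes holding the input splits, and the shuffle moves data over the network to the reducers; the sketch keeps only the logical order of the phases.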

Hadoop: MapReduce Vs Spark

I sometimes come across the question “Is Apache Spark going to replace Hadoop MapReduce?”. The answer depends on your use cases. Here I try to explain the features of Apache Spark and Hadoop MapReduce as data processing frameworks. I hope this post helps answer some of the questions that may have come to your mind recently.

Data Warehouse: Teradata Vs Hadoop

Teradata is a fully horizontally scalable relational database management system (RDBMS). In other words, it is a Massively Parallel Processing (MPP) database system built on a cluster of commodity hardware (computers) called “shared-nothing” nodes (each node has its own CPU, memory and disks to process data locally), connected through a high-speed interconnect. It relies on horizontal partitioning of relational tables, along with parallel execution of SQL queries.
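
To illustrate horizontal partitioning in a shared-nothing system, here is a minimal Python sketch that hash-partitions the rows of a hypothetical `sales` table across simulated nodes and runs a GROUP BY aggregate on each node's local rows. It assumes CRC32 as the partitioning hash and uses threads to stand in for independent nodes; Teradata's actual row-hash and data distribution work differently, so treat this only as an illustration of the idea.

```python
import zlib
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

# Hypothetical table row: (region, amount); region is the partitioning key.
Row = Tuple[str, int]


def node_for(key: str, num_nodes: int) -> int:
    """Pick the owning node with a stable hash (CRC32 here, not Teradata's row hash)."""
    return zlib.crc32(key.encode("utf-8")) % num_nodes


def partition(rows: List[Row], num_nodes: int) -> List[List[Row]]:
    """Horizontal partitioning: each node stores only the rows that hash to it."""
    nodes: List[List[Row]] = [[] for _ in range(num_nodes)]
    for row in rows:
        nodes[node_for(row[0], num_nodes)].append(row)
    return nodes


def local_sum(rows: List[Row]) -> Counter:
    """Work done by one shared-nothing node: aggregate its local rows only."""
    totals: Counter = Counter()
    for region, amount in rows:
        totals[region] += amount
    return totals


def parallel_sum_by_region(rows: List[Row], num_nodes: int = 4) -> Dict[str, int]:
    """Roughly SELECT region, SUM(amount) FROM sales GROUP BY region."""
    partitions = partition(rows, num_nodes)
    # Threads stand in for independent nodes; real MPP nodes are separate machines.
    with ThreadPoolExecutor(max_workers=num_nodes) as pool:
        partials = list(pool.map(local_sum, partitions))
    # Each node returns partial sums; adding them gives the global result.
    # Because rows are partitioned on region, each group comes from one node.
    result: Counter = Counter()
    for partial in partials:
        result.update(partial)
    return dict(sorted(result.items()))


if __name__ == "__main__":
    sales = [("east", 10), ("west", 5), ("east", 7), ("north", 3), ("west", 1)]
    print(parallel_sum_by_region(sales))  # {'east': 17, 'north': 3, 'west': 6}
```

The point of the design is that no node needs to read another node's disk: each one scans and aggregates only its own partition, and only the small partial results cross the interconnect.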

“Hadoop: The Definitive Guide” 3rd Edition

Apache Hadoop ecosystem, time to celebrate! The much-anticipated, significantly updated 3rd edition of Tom White’s classic book, Hadoop: The Definitive Guide, is freely available here for all my readers.

YARN : NextGen Hadoop Data Processing Framework

In today's big data world, storing massive amounts of data and processing it quickly are big challenges, and Hadoop addresses them. Hadoop is an open-source software framework for storing and processing big data in a distributed fashion on large clusters (thousands of machines) of commodity (low-cost) hardware. Classic Hadoop has two core components, HDFS and MapReduce. HDFS (Hadoop Distributed File System) stores massive amounts of data across commodity machines in a distributed manner, and MapReduce is a distributed data processing framework for working with that data.

HDFS File Blocks Distribution in DataNodes

Background

When a file is written to HDFS, it is split into big chunks called data blocks, whose size is controlled by the parameter dfs.block.size in the config file hdfs-site.xml (in my case left at the default of 64MB). Each block is stored on one or more nodes; the number of copies is controlled by the parameter dfs.replication in the same file (set to 3, the default, in most of this post). Each copy of a block is called a replica.

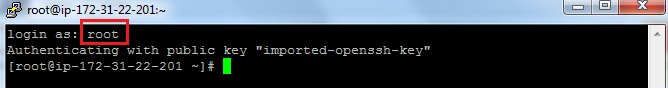
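
The block and replica arithmetic described above can be sketched in Python. The helpers below (`split_into_blocks`, `place_replicas`) are hypothetical; they assume the 64MB block size and replication factor of 3 mentioned above, and they use a naive round-robin placement rather than HDFS's real rack-aware placement policy.

```python
from typing import Dict, List

MB = 1024 * 1024


def split_into_blocks(file_size: int, block_size: int = 64 * MB) -> List[int]:
    """Sizes of the blocks a file is split into; the last one may be smaller."""
    full_blocks, remainder = divmod(file_size, block_size)
    return [block_size] * full_blocks + ([remainder] if remainder else [])


def place_replicas(num_blocks: int, datanodes: List[str],
                   replication: int = 3) -> Dict[int, List[str]]:
    """Assign each block `replication` distinct DataNodes, round-robin.

    Illustration only: HDFS's default placement policy is rack-aware.
    """
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds the number of DataNodes")
    return {
        block: [datanodes[(block + r) % len(datanodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


if __name__ == "__main__":
    blocks = split_into_blocks(200 * MB)
    print([size // MB for size in blocks])  # [64, 64, 64, 8]
    print(sum(blocks) * 3 // MB)            # 600 (MB of raw disk with replication 3)
    print(place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"]))
```

A 200MB file therefore becomes four blocks and twelve replicas. The last block occupies only 8MB on disk, because HDFS does not pad a partial final block to the full block size.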

Setting up Hadoop Cluster on Amazon Cloud

I wanted to get familiar with the big data world, and decided to test Hadoop on Amazon Cloud. It was a really interesting and informative experience. The aim of this blog is to share my experience, thoughts and observations related to both practical and non-practical use of Apache Hadoop.

Overview

A typical Hadoop multi-node cluster